Chesterton's Fence, and Monkeys

Toby Irvine

It can be hard to know how to change your ways of working to be more secure. Here we look at the types of security controls and what monkeys can teach us about processes.

G. K. Chesterton—writer; modernist philosopher; friend to George Bernard Shaw, H. G. Wells and Bertrand Russell but, most importantly, author of a piece I can torture into an article on security.

Chesterton’s fence

In the matter of reforming things, as distinct from deforming them, there is one plain and simple principle; a principle which will probably be called a paradox. There exists in such a case a certain institution or law; let us say, for the sake of simplicity, a fence or gate erected across a road. The more modern type of reformer goes gaily up to it and says, ‘I don’t see the use of this; let us clear it away.’ To which the more intelligent type of reformer will do well to answer: ‘If you don’t see the use of it, I certainly won’t let you clear it away. Go away and think. Then, when you can come back and tell me that you do see the use of it, I may allow you to destroy it.’

Simply by reading my articles you’ve clearly proven you’re the more intelligent type of reformer. So let’s get on our philosophical high-horse and make some logical leaps.

When evolving your approach to security you’re going to be moving capabilities, and responsibilities, around to make them more effective and scale better. In doing this, you’re going to encounter fences across the road, as described by Chesterton, and have to do something about them. Some of these fences will initially appear quite inscrutable.

The security-minded amongst you will already be thinking that the fence, like all fences, is a control. Security-minded people think a lot about controls, but not many other people do, so the first step in “seeing the use of it” is to define it:

“Security controls are safeguards or countermeasures to avoid, detect, counteract, or minimize security risks to physical property, information, computer systems, or other assets.” (Wikipedia)

So an actual fence is a physical security control—like a door or a lock. We’ve got a philosophical fence here, however, which can be whatever we want it to be. Far more useful for laying out a thesis on security, almost completely useless for keeping people out of your garden.

To understand the control that we’re deciding what to do with, we have to categorise it, and there are a couple of useful ways in which we can do that. Firstly, we can categorise controls by how they act:

- Preventative controls are intended to prevent an incident occurring (Like a fence! Or, say, a git pre-commit hook that checks for passwords/API keys about to be committed to a repository)

- Detective controls are intended to identify and characterise an incident in progress (a script that constantly scans all your repositories for anything that looks like a password/API key)

- Corrective controls are intended to limit the extent of any damage caused by the incident (immediately rotating passwords/API keys, googling how to expunge commits from a git history because you did it that one time back in 2016 and cannot remember how)
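To make the first two categories a little more concrete, here is a minimal sketch of the kind of check that a pre-commit hook (preventative) or a repository scanner (detective) might run over text. The rule names and patterns are illustrative assumptions, not a complete rule set; real secret scanners use far more rules and still produce false positives.

```python
import re

# Hypothetical rules for illustration only; real scanners ship many more.
SECRET_PATTERNS = {
    # AWS access key IDs are "AKIA" followed by 16 upper-case letters or digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A name like password/api_key/secret/token assigned a quoted value.
    "hardcoded_credential": re.compile(
        r"(?i)\b(password|passwd|api[_-]?key|secret|token)\b\s*[:=]\s*['\"][^'\"\s]{8,}['\"]"
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for lines that look like secrets."""
    findings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((number, name))
    return findings
```

Run against the staged changes before a commit, a non-empty result would block the commit. Run on a schedule against everything already in your repositories, the same function acts as a detective control instead.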

That’s all by the book and gets us some way towards understanding the control before we reform the heck out of it. We’re going to need to go deeper to fully grok it, however, so I propose an additional way of categorising controls that should really help our decision-making process.

This categorisation has an order and helps us not only understand a control but gauge its quality, too. Let’s go from “best” to “worst”…

1. Controls in response to a defined threat

The best controls are a direct response to a defined threat. This makes their purpose clear and means we can easily determine their effectiveness. An example:

If, as an organisation, you’ve decided that while building your applications you’re concerned about an insider threat of someone inserting malicious code into your systems then you’ll write that down in your threat model and think about controls to prevent that happening.

A scalable, and often used, control is for all code changes to be peer-reviewed by someone else before going out to production. Now any attempt from the inside to subvert your systems is going to have to be a conspiracy of two people, which is much harder to coordinate. For critical systems you may extend that to review by two other people. Now we’re in a fantasy land where we have to get three developers to agree with each other, which is clearly impossible.
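As a rough sketch of how that rule could be enforced automatically, the check below counts only approvals from people other than the author. The function name and its parameters are hypothetical; in practice this kind of rule usually lives in your code hosting platform’s branch protection settings rather than in your own code.

```python
def review_satisfied(author, approvers, required=1):
    """True when enough distinct people other than the author approved the change."""
    # Self-approval and duplicate approvals must not count towards the total.
    independent = set(approvers) - {author}
    return len(independent) >= required
```

With `required=1` an insider needs one accomplice; with `required=2`, for critical systems, they need two.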

These are the best type of controls. We’ve got a documented rationale that says, “This is why we do this”. If we’re going to make changes to the process then we know exactly what the original intent was and can ensure that any change only improves things.

2. Controls dictated by external compliance

Sometimes controls are imposed on you. When your organisation’s activities fall under a regulatory regime (and, with GDPR, that’s basically every organisation now) you never have the threat model that the standard’s authors were working with. Sometimes it’s clear from the standard what threat is being mitigated. Often, though, instead of “This is why we do this”, the best we can say is, “We have to do this, we don’t know why”.

Adam Shostack had quite enough of this last year and reverse engineered a threat model for the PCI DSS standard. He wrote up his thoughts on the problem with security standards and I couldn’t agree more. Standards authors should publish explicit threat models alongside the standard to aid in understanding and enable everyone to make improvements to their approach to meeting the standard with confidence.

Charitably, you could put this down to a simple omission from the published standards but parts of them are best described as my next category.

3. Controls that are security hygiene

These are the things that everyone does: controls that are not given much thought and are pretty pervasive across the tech industry. For example, if a system has administrator-level functionality of some kind, admin privileges are generally not given to everyone on the team. Or a team’s data is regularly backed up somewhere (Is this a security control? Yep! There’s a reason we have the A in the CIA triad). We might not be able to point to a standard or a particular threat but these controls are helping to prevent some issues.

Like washing your hands after going to the bathroom, everyone does these things (well, almost everyone but… don’t be that guy) and they’re important, but we don’t pay them much attention. This is the danger they pose for the determined reformist—we might simply not notice they’re there. In the process of moving things around, we might undermine some of these security hygiene controls so extra effort is needed to see them in the first place.

4. Controls that make you feel secure

We’re getting down to some pretty low-quality security controls now. This type of control is frequently called “Security Theatre” but I like to think of them as “Emotional Security” because there’s some value in that.

An emotional security control might be a selective CAB, as I’ve described in a previous article. There’s an approval board with security expertise on it and that’s giving the organisation comfort. The fact that only 4% of the change flowing to the IT systems is being reviewed by the board is an implementation detail everyone’s happily unaware of.

In our evaluations, it’s always worth suspecting that a control might be simply for emotional security. If you can’t connect it to a threat, or you can but it’s clear that the control does nothing to effectively minimise that threat, then you can definitely improve things by changing the control or even removing it completely.
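If you keep an inventory of your controls, that suspicion can be turned into a simple query. The sketch below assumes a hypothetical `Control` record with a linked threat and a flag for whether there is evidence that the control reduces it; neither the record nor its field names come from any standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Control:
    name: str
    threat: Optional[str] = None   # the threat this control answers, if known
    reduces_threat: bool = False   # is there evidence the control actually works?


def suspect_controls(controls):
    """Names of controls with no linked threat or no evidence of effect."""
    return [c.name for c in controls if c.threat is None or not c.reduces_threat]
```

Anything this returns is a candidate for a closer look, not for automatic removal. Chesterton would want you to go away and think first.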

I was told there would be monkeys?

Yes. I am totally getting to those. Nearly there!

5. Mystery controls

Nobody knows where they came from or why we’re doing them. You’re looking at this fence and thinking, “This is a perfectly nonsense fence!”. But the apparent complete nonsense is really worrying you—the fence has been there for a long time. Surely it must have a reason.

In the 2010 book ReWork by DHH and Jason Fried, Jason says that,

Policies are organizational scar tissue. They are codified overreactions to situations that are unlikely to happen again. They are collective punishment for the misdeeds of an individual. This is how bureaucracies are born. No one sets out to create a bureaucracy. They sneak up on companies slowly. They are created one policy—one scar—at a time. So don’t scar on the first cut. Don’t create a policy because one person did something wrong once. Policies are only meant for situations that come up over and over again.

Which he’d tweeted previously in 2009. And this is one potential explanation for a mystery control: a codified overreaction to a previous situation that’s unlikely to happen again.

I propose that mystery controls can be even more insidious than this. Not even requiring a rare but meaningful event, simply a change in your organisation’s environment over time.

Let me tell you a story about monkeys.

Yes!

Now, I must stress that you should not try this at home. This is a thought experiment about monkeys that can help us reason about something, useful in much the same way as the philosophical fence. Real monkeys, however, are extremely problematic and not at all as useful as a real fence.

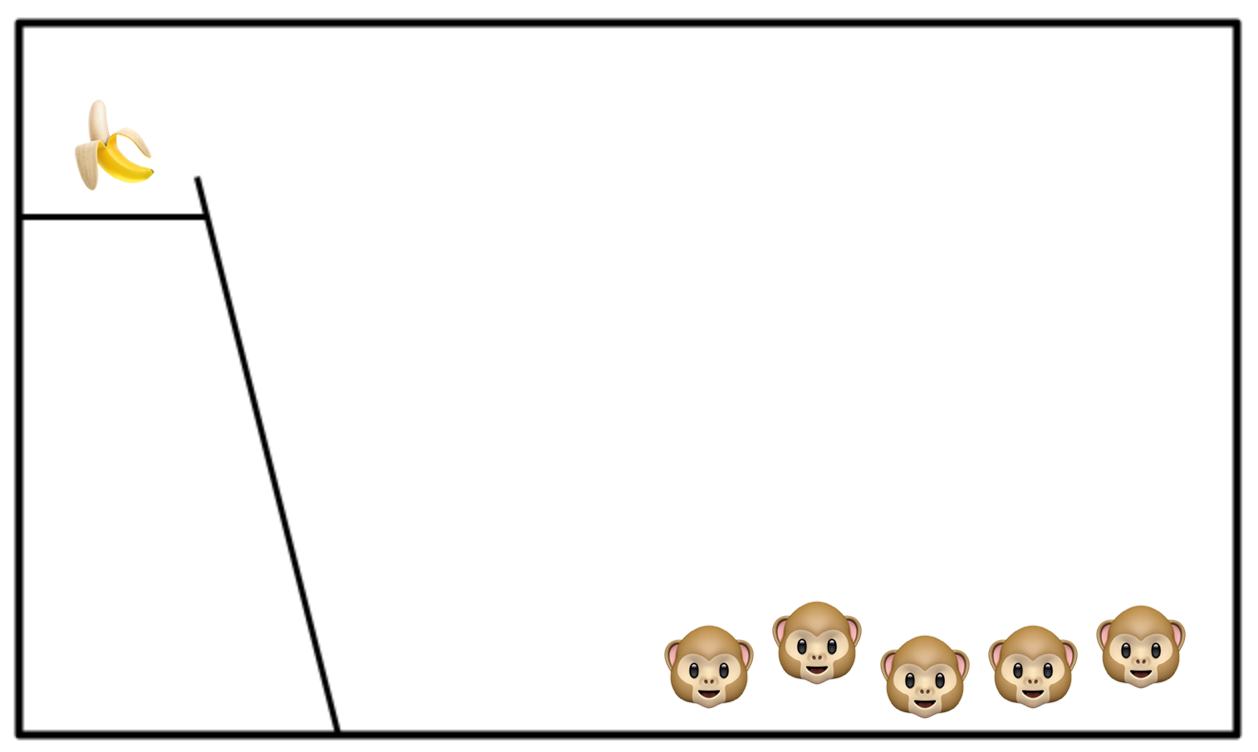

With that out of the way, for this thought experiment we’re going to need five monkeys in a cage.

As you can see, the cage has a ladder going up to a high platform and we’ve placed a banana on the platform. Eventually one of the monkeys is going to notice the banana and head for the ladder to go get it.

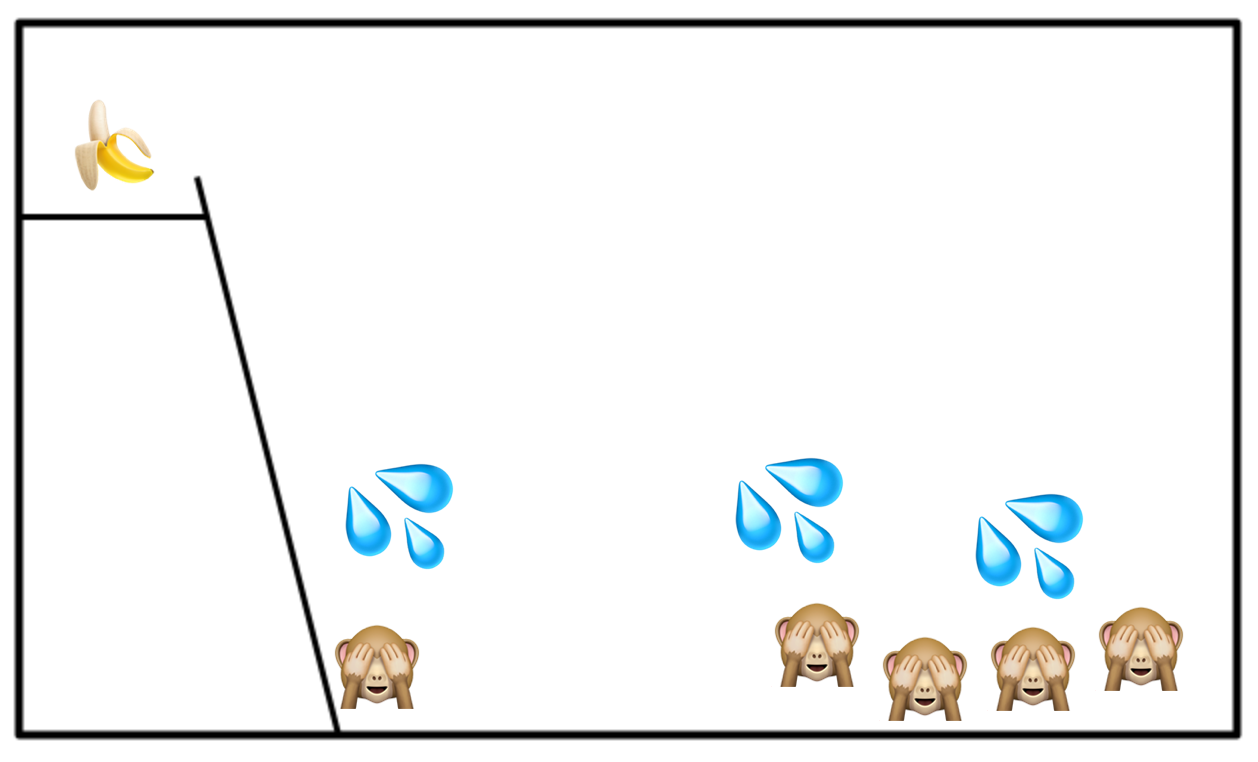

As soon as this monkey touches the ladder you hose down all the monkeys with cold water.

AGAIN, thought experiment monkeys. Not real monkeys. I have not put this experiment to the board of ethics. Not even a philosophical board of ethics.

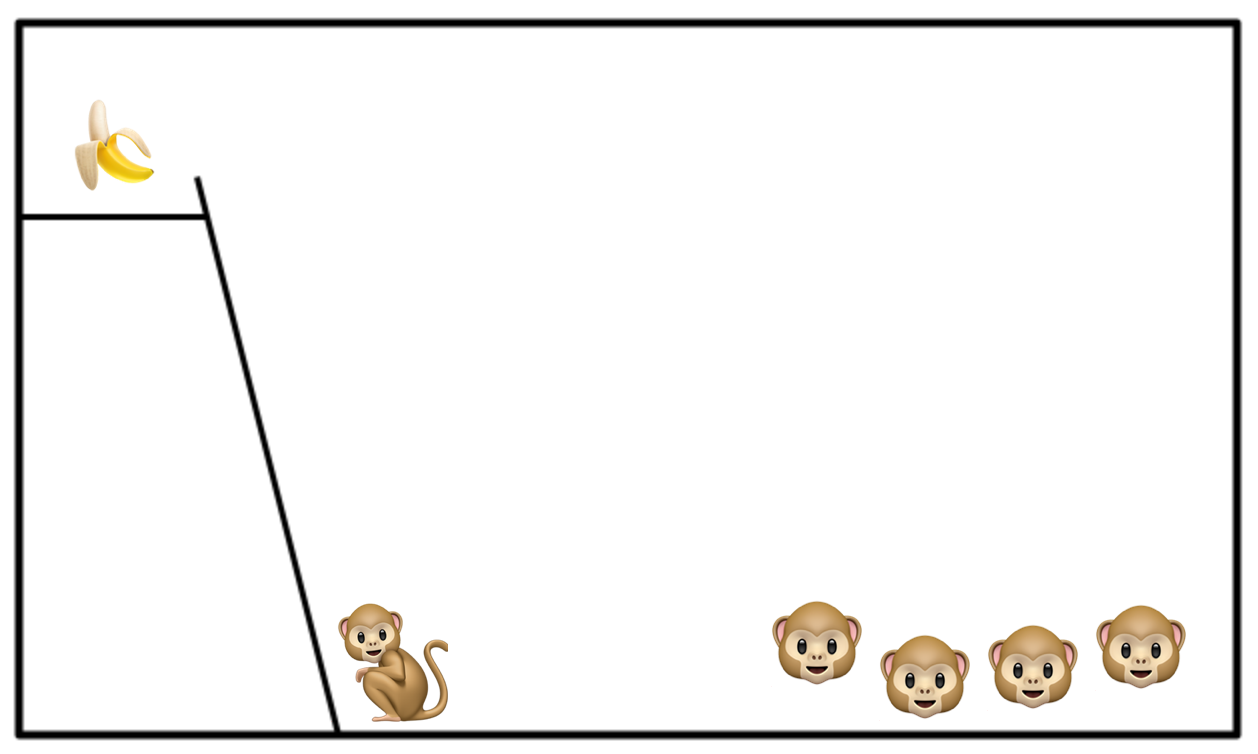

It may take a few hosing downs to establish clear cause and effect for the monkeys but eventually they’ll give up on the banana. Now we can swap out one of the monkeys for a fresh monkey. Fortunately, we have a healthy stock of monkeys.

This monkey has never been hosed down with cold water. In fact, go ahead and put the hose away; you won’t be needing it again, thankfully. The new monkey will see the banana and get quite excited. He’ll head for the ladder, at which point the other monkeys will beat the snot out of him. They don’t want to get hosed down again.

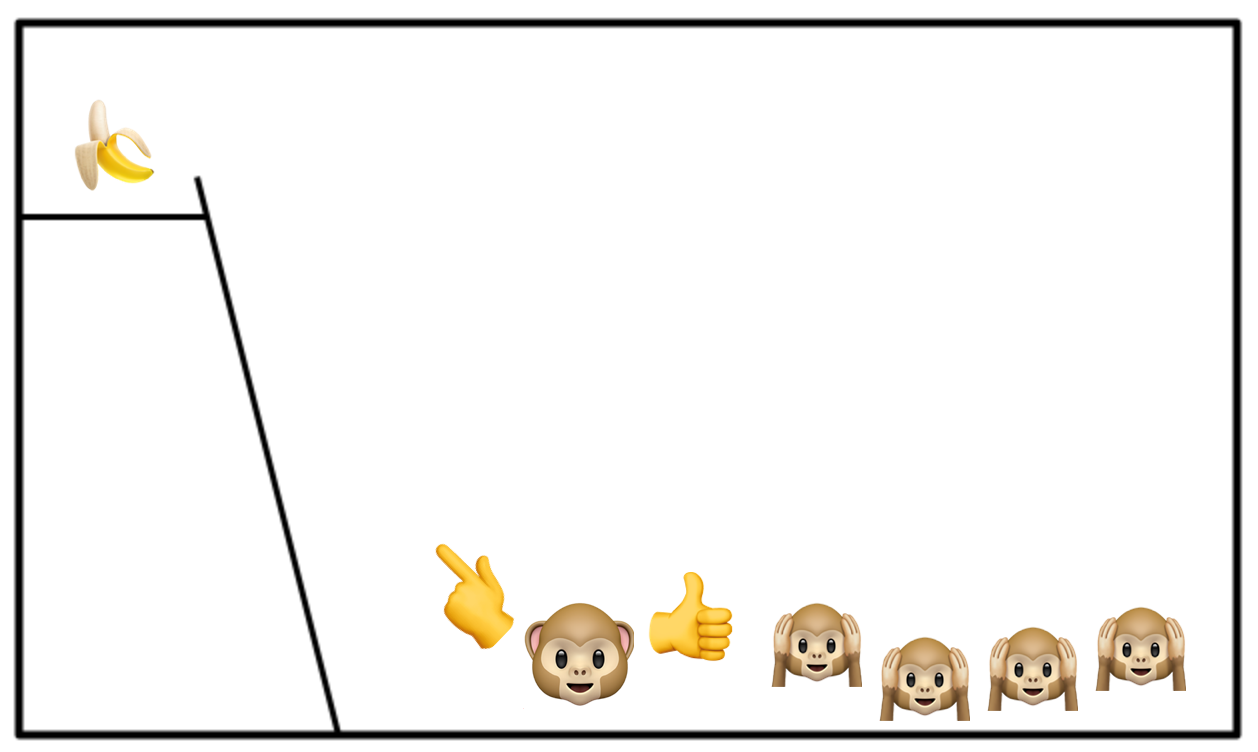

The new monkey will be very confused by this but will learn quickly not to touch the ladder. Now we swap out another of the original monkeys for a fresh monkey. This new monkey will also see the banana, get quite excited and head for the ladder. The other monkeys will again beat the snot out of him and, crucially, the first new monkey will join in with them. He got beaten for touching the ladder and he’s going to make sure that this new guy does too.

Go ahead and keep swapping out the original monkeys one by one until there are none of them left. Now you have a monkey organisation where nothing bad, apart from getting beaten by the other monkeys, has ever happened to a single monkey for trying to eat the banana. Yet anyone who tries to do so will be soundly beaten.

And if you could ask the monkeys why this was so, and they could tell you, they would say:

That’s just the way we’ve always done things around here

.

.

.

I’ll end on one more quote from G. K. Chesterton, this time writing about George Bernard Shaw:

If man, as we know him, is incapable of the philosophy of progress, Mr. Shaw asks, not for a new kind of philosophy, but for a new kind of man. It is rather as if a nurse had tried a rather bitter food for some years on a baby, and on discovering that it was not suitable, should not throw away the food and ask for a new food, but throw the baby out of the window, and ask for a new baby.

And I’ll leave you to your own philosophies on that. Thanks for reading!

Shameless plug: Secure Delivery specialises in getting scalable, modern application security practices embedded into application delivery. Get in touch with us to find out more about how we can raise your organisation’s security capabilities to ensure your teams are building the high-quality, secure systems you need, at pace and scale.
