Number of Data Breaches is not a Good Security Metric

Toby Irvine

It's hard to manage product security if all you have is a lagging indicator of it. Reacting to data breaches is not planning ahead. How do you know that things are being built securely?

Ok, of course it’s not. Does this feel familiar though?

- Budget gets allocated to cybersecurity

- Cybersecurity activities happen

- Everyone is reasonably happy, except the application delivery teams having cybersecurity activities done to them

- Terrible news - a data breach!

- PANIC

- Budget gets substantially increased for cybersecurity

- Substantially more cybersecurity activities happen

- Everyone is reasonably happy, except the application delivery teams having substantially more cybersecurity activities done to them

- Terrible news - a data breach!

- PANIC

That’s… not a great situation. A data breach is a trailing indicator, also known as a lagging indicator:

A lagging indicator is a financial sign that becomes apparent only after a large shift has taken place. Therefore, lagging indicators confirm long-term trends, but they do not predict them.

So that’s a terrible measure to base your decision making and budget allocations on. But a trailing indicator of what? We’re going to need to know to be able to get to leading indicators. Leading indicators are a brilliant measure to base your decision making and budget allocations on.

Data breaches can occur in many ways - a phishing attempt could compromise a workstation and lead to stolen data, a laptop could be left on a train (remember when that was commonplace? Hooray for full disk encryption), or an employee could walk out the door with a thumbdrive containing all your customer data. The broad field of cybersecurity is concerned with all of these scenarios.

You’re most likely to hit the headlines, however, with a data breach carried out over the internet and through your deployed applications. In this case we’re talking about application security, which has become the dominant part of cybersecurity by far. Why?

Software is eating the world.

Marc Andreessen, 2011 (or in the WSJ)

As everything became software, cybersecurity became application security. Software spread out to cover more of our world and down to consume the infrastructure. Network? Some lines of config in a YAML file. Servers? Some lines of config in a YAML file, or Dockerfile, or “I don’t care about servers just run this code and store this data”. All part of the source code for your systems, all part of the application.

We’re getting closer to what a data breach is a trailing indicator of. We just need to know what application security is. So, let’s state what we mean by security in the context of applications:

Security is simply a non-functional requirement of your applications

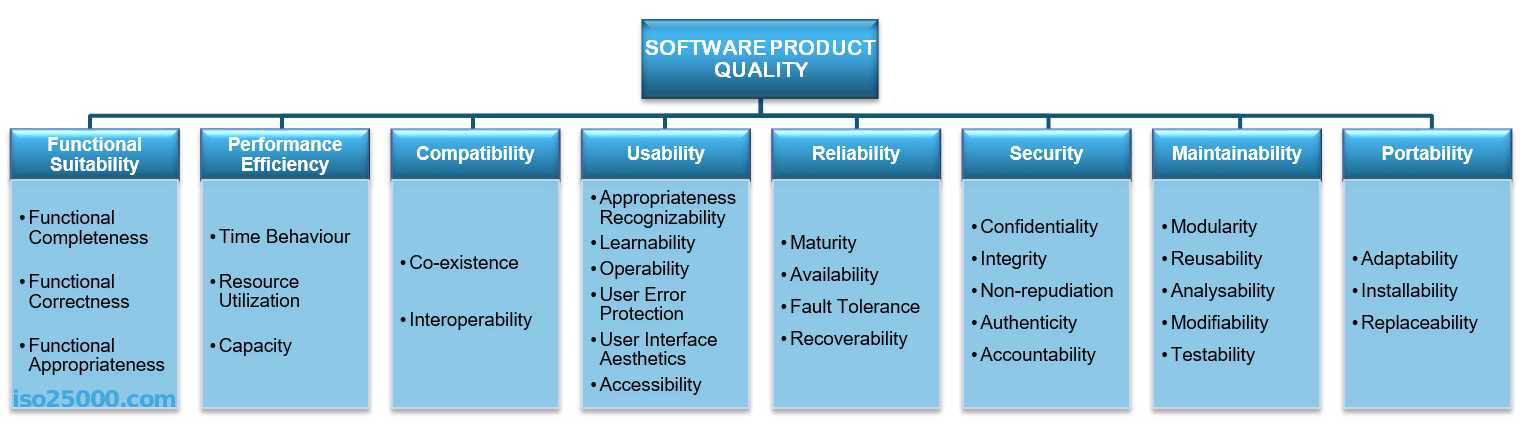

Pretty straightforward. A more holistic view is that security is a non-functional requirement of both your application and the processes used to build and operate it. But those processes are there to instill the security into your application, and it carries that with it as an intrinsic quality. Now, if security is part of the quality of your application, what is software quality? We’ve got a standard for that, fortunately: the ISO 25000 series, and specifically the ISO 25010 quality model. Good, that saves us a bunch of time, let’s have a look:

The quality of a system is the degree to which the system satisfies the stated and implied needs of its various stakeholders, and thus provides value. Those stakeholders’ needs (functionality, performance, security, maintainability, etc.) are precisely what is represented in the quality model, which categorizes the product quality into characteristics and sub-characteristics.

https://iso25000.com/index.php/en/iso-25000-standards/iso-25010

Those clever folk at ISO have connected their quality model to value provided, otherwise it’s a fairly meaningless exercise. They’ve also sneakily given us a left-to-right order of importance to these characteristics of quality. Do you agree with them? Have a good think about each one, the value they provide, and the business impact of improving or worsening each one.

Higher quality applications provide more value; applications with poor security are of lower quality; data breaches are a trailing indicator of low software quality.

We’re thinking about application security in the right way now. It may be the futuristic year of 2020 but people are still required to program computers, so to get to leading indicators of software quality we need to study the ways that people are producing software. With another excellent bit of fortune, we have the work of Nicole Forsgren, Jez Humble and Gene Kim to help us here. Higher performing organisations derive more value from higher quality software delivered by high performing technology functions and, out of all things measured, the data analysis shows there are just four metrics that matter as leading indicators:

- Lead Time

- Deployment Frequency

- Mean Time to Restore

- Change Fail Percentage

If you’re doing well on these four metrics when delivering software then you have good, leading indicators of your organisation being high performing and having high quality software. Bear in mind you don’t need to be the best in the world overall - these metrics are relative to your competition. If your competitors have a lead time to deploy changes of a month and you can do it in a day, or even a week, you win.
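As a rough illustration of how these four metrics could be measured, here is a minimal Python sketch. The `Deployment` record, its field names and the `four_key_metrics` function are all hypothetical, and the definitions used (median commit-to-deploy time for lead time, deployments per day for frequency, mean deploy-to-restore time for failed changes) are simplifying assumptions, not the definitions used in the research itself.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean, median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a failure in production?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def four_key_metrics(deployments, period_days):
    """Summarise the four metrics over a reporting period of `period_days` days."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")

    # Lead time: hours from commit to running in production.
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600
        for d in deployments
    ]

    # Time to restore: hours from a failed deployment to service restored.
    failures = [d for d in deployments if d.failed]
    restore_hours = [
        (d.restored_at - d.deployed_at).total_seconds() / 3600
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "median_lead_time_hours": median(lead_hours),
        "deployments_per_day": len(deployments) / period_days,
        "mean_time_to_restore_hours": mean(restore_hours) if restore_hours else None,
        "change_fail_percentage": 100 * len(failures) / len(deployments),
    }
```

In practice the records would come from your version control and deployment tooling. Tracking the same numbers period over period, and against what you know of your competitors, matters more than any single absolute value.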

What you will notice from these metrics is that functional suitability doesn’t impact any of them. You can quite happily build completely the wrong thing for your market with extremely high quality. If you like. Ensuring you’re building the right thing is a whole different article, so I’ll cover it there.

What can and does affect the four metrics that matter is the set of non-functional characteristics of your system. They affect these metrics, these metrics affect them, and they affect each other in quite an interdependent way:

- A system with high reliability will be decoupled, without any single points of failure, so change fail percentage will improve, mean time to restore will likely be quicker, and deployment frequency will be higher because the decoupled parts can be updated separately

- A system with high reliability will automatically detect aberrant behaviours and terminate them, improving security

- A system with high performance efficiency will be resistant to attacks that attempt to degrade performance and sensitive enough to detect them, improving security

- A system with a short lead time can be changed in production quickly, improving response time to security defects detected

- And many, many more

A high quality system will be more secure than a low quality one, and a low quality system will score very badly on the four metrics that matter.

Aim for high quality, deliver value at pace without compromising quality, and be a high performing organisation.

Improving your non-functionals to achieve high quality means both paying attention to them and dedicating effort to improving them alongside the functional characteristics of your system. We’ve got an order of priority for delivering value in the ISO 25010 model, so set your work priorities and effort allocation accordingly. Paying attention to them means measuring them, or you’re flying blind. Some non-functional requirements are easier to measure than others - performance is straightforward, security not so.

There are measures you can put in place for security - an accurate count of security defects known in your system is a good start and at Secure Delivery we can help you with the right tools, reporting, processes and training to give you the accuracy and outcomes you need.
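To make "an accurate count of security defects" a little more concrete, here is a small Python sketch of the idea. The `SecurityDefect` record and the severity labels are hypothetical, and a real count would be fed from your issue tracker and scanning tools, not a hand-written list.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SecurityDefect:
    """A known security defect (hypothetical record shape)."""
    identifier: str
    severity: str          # assumed labels, e.g. "high", "medium", "low"
    resolved: bool = False


def open_defect_counts(defects):
    """Count unresolved security defects per severity label."""
    return Counter(d.severity for d in defects if not d.resolved)
```

Reporting this count regularly, broken down by severity, gives you a trend you can act on before a breach, which is the whole point of moving away from trailing indicators.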

A final thing to notice about the four metrics that matter: if you rely on unnecessary hand-offs from one team to another to determine the level of any quality characteristic, instead of continuous responsibility, then you’ve already lost. If you’re:

- Handing off to a test team to determine functional correctness

- Handing off to a security team to determine security level

- Handing off to a performance team to determine performance efficiency

- Handing off to an operations team to determine reliability

Then the delays introduced, the lack of shared context, the miscommunication and the differing incentives across the teams will negatively impact your lead time, deployment frequency, mean time to restore and change fail percentage. You will not be a high-performing organisation. The quality of your software systems will be low.

Structure your teams and your delivery process to ensure that the people responsible for delivery are also continuously responsible for the quality of that delivery.

This means all characteristics of quality, including security. Allocate your budget and effort based on improving the leading indicators of quality, not by reacting to trailing indicators. See what’s working and what’s not working long before everything goes wrong.

Move fast, deliver quality software, win in the marketplace.

If you want to know more about getting your application security under control while increasing your product delivery capabilities, talk to us at Secure Delivery. You can contact me on LinkedIn, or us via the contact details on our website.
